This 1-hour-20-minute video, part of our Data Mining Series, discusses the entire lifecycle of a Data Mining Model. You will learn how to build models and mining structures, starting by creating a Data Source and Data Source View, how to train it with your data, and how to view the results. Most importantly, you will also understand how to verify a model’s validity, by applying tests of accuracy, reliability, and usefulness. You will understand, and you will also see being used, such key verification techniques as: a Lift Chart, Profit Chart, Classification Matrix, and Cross Validation. Finally, you will see how to predict unknown outcomes using your model.

We begin by introducing a well-known process methodology for data mining, known as CRISP-DM. The key to any data mining project is the understanding of its iterative nature: to gain a better business understanding you explore the meaning of your data, using mining models, and you necessarily modify those models, to get better, more accurate results. However, in the process of doing that, you change your understanding of the original issue, that you wanted to resolve, and the process continues. It is a loop, one that rewards you with intelligence, which helps you improve your organisation.

There are five stages in a data mining model’s lifecycle: Model Definition, Training, Testing, Use (Scoring), and Update, and we discuss those in detail, starting with Defining a Model. The main model definition concepts, such as Cases, Keys, Column Data and Content Types, Usage, Discretization and so on, have been introduced in another module of this course, Data Mining Concepts and Tools, please make sure to follow it, if you are new to data mining. As there are several ways to build models, we briefly discuss the merits of using SQL Server Data Tools (SSDT), which are more suited to project-focused work, where you return to the same model over a period of time, perfecting it, as opposed to working entirely within Microsoft Excel, which is simpler, and easier to use for a more casual data miner. In addition, you will hear, very briefly, about using PMML (Predictive Model Markup Language) and DMX (Data Mining Extensions) as alternative ways to define models.

Time to build a model! In the first series of demos you will see a Data Source and a Data Source View being created, so that we can use a simple, flat table of so-called customer signatures, which contains their demographic characteristics, such as age, occupation, and income, together with the known predictable outcomes, in our case the number of purchases each customer has made in our store, which we wish to study by building a mining model. Although our table comes from SQL Server, there is absolutely no limit where your data should be stored. You can use any database, spreadsheet, or even a text file. While you use SQL Server Analysis Services (SSAS) Multidimensional and Data Mining engine for analysis of this data, you are free to use any source for your data. The next step, creating a Mining Structure, together with a Mining Model, is the heart of the mining process. Once defined, a mining model needs to be trained using your data. You will see how we train our model, and how we visualise the results using a Decision Tree viewer. A common question is asked about the amount of necessary data for training. As Rafal explains, there are no fixed rules on a specific number of cases, however there are qualitative rules about the validity of the model, that must be fulfilled, which are often related to the amount of training data.

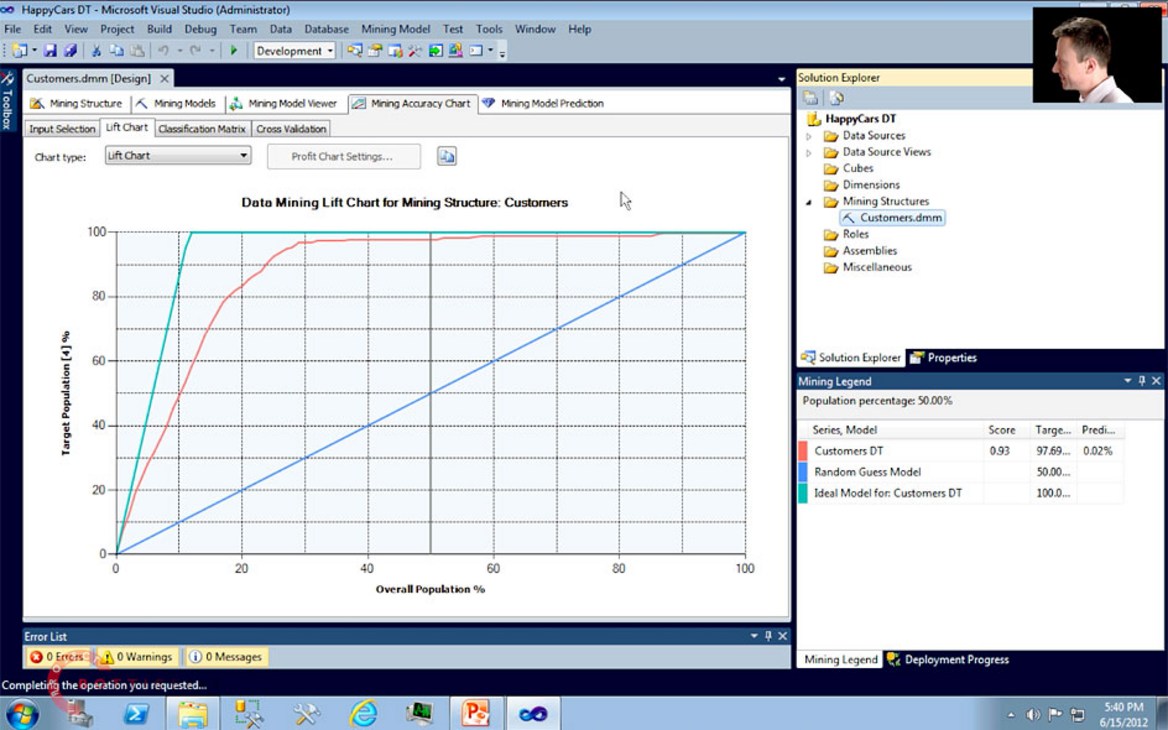

Testing and validation of models is of paramount importance. After all, what’s good of a working model if it cannot be trusted? You will hear an extensive explanation of testing of Model Accuracy, Reliability, and Usefulness, just before you see it being done on our model. The demo explains, and shows, at the same time, those most important validation techniques, including: Lift Charts, Profit Charts, Classification Matrices, and finally, Cross Validation for reliability testing.

If your model passes those tests, you may want to use it for making predictions about the unknown, by applying it to data on which you may want to perform predictions, which is sometimes called Model Scoring, or scoring data with a model. This is shown in two demos, both achieving the same results. First, using SSDT, and later in a simpler, but equally powerful way, using Excel, you will see how we use our just-created, and validated, model of customers’ characteristics to predict the most likely numbers of purchases that future, potential customers might make. This is a very common usage scenario, as you might want to apply a marketing list, and a mining model, to discover which customers are most likely to be interested in your products, and even to find out what is their profit potential to your company, impact on your credit, possibility of churn, or even risk of fraud.

If you would like to follow the demos, shown in this video, you will need access to a working installation of SQL Server Analysis Services (2012+, 2017 works well) and the database engine—get a trial if you don’t have it, or the free developer edition. You should also have access to Microsoft Office Excel 2013+ (2016 works well) and the free Data Mining Add-Ins, which come with its own mining data sample in an Excel workbook. You should also use Adventure Works DW datasets available from GitHub, and you may want to use our own dataset, HappyCars, which is available for download from here.